How I designed a re-engagement configuration to help martial arts school owners recover stalled conversations through context-aware AI messaging.

Role

Lead product designer

Time & company

5 months | MyStudio

Collaboration

Front-end developers, AI prompt engineers, QA

Context

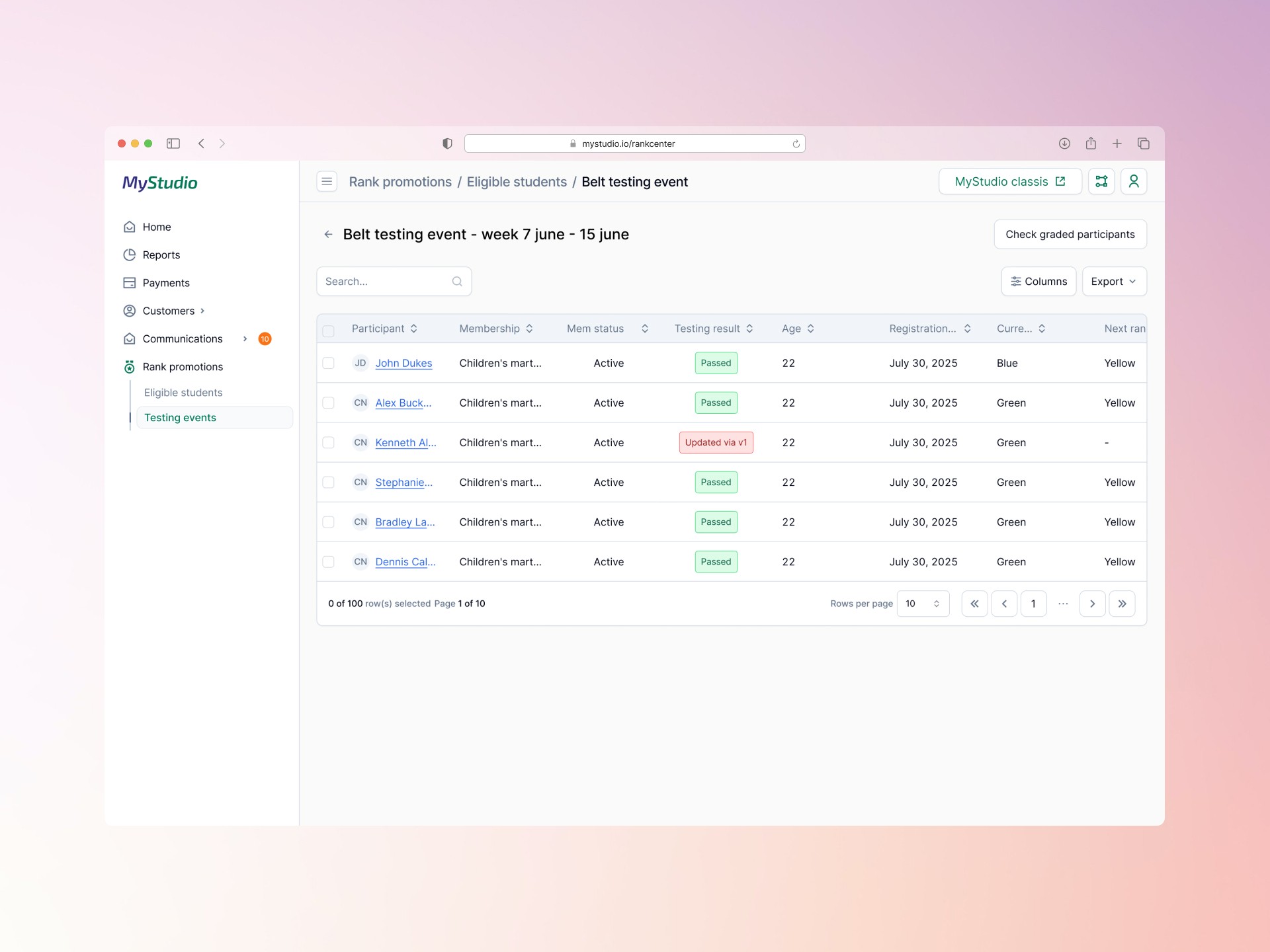

MyStudio is a sales enablement and membership management platform designed specifically for martial arts studios.

Within this ecosystem, Amplify is a core feature that lets owners create human-like AI agents. These agents respond to leads immediately via text to book trials, schedule first-class appointments, and sell events or memberships.

Studio owners can deploy multiple agents, each specialized in a specific service and powered by the studio's unique knowledge base to ensure accurate and helpful guidance.

Problem

Amplify didn’t follow up when leads stopped replying.

Only about 30% of leads replied at first. When leads went quiet, Amplify didn’t follow up, which meant studios were missing a large share of potential sales.

As one studio owner said:

“If someone doesn’t reply, we usually follow up. The AI didn’t.”

The challenge was adding follow-ups in a way that improved conversions without making messages feel spammy or hard to manage.

Decisions and tradeoffs

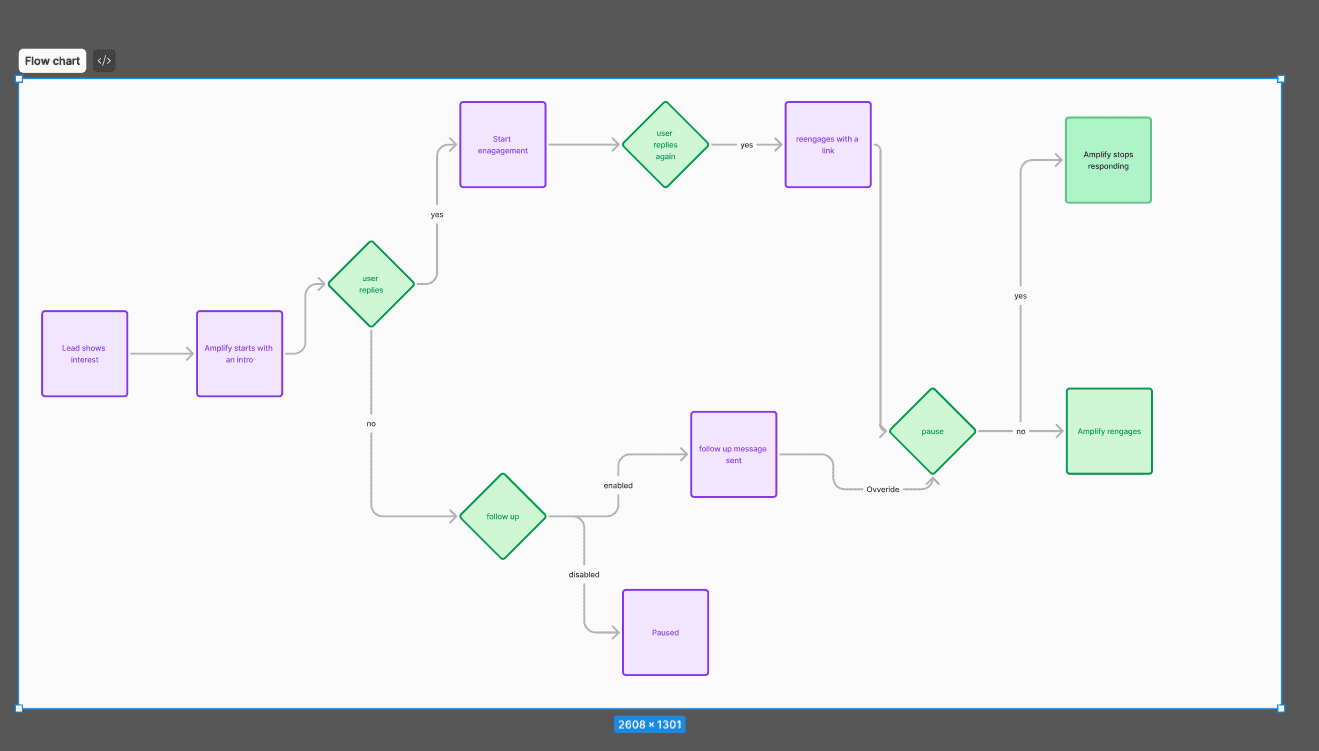

We added follow-up automation per agent.

Decision:

We let each AI agent follow up when a lead stopped replying. Follow-ups were set up per agent instead of applying one rule to everything.

Tradeoffs:

This took more setup and engineering work, but kept things clear and flexible.

Constraints:

Different agents sell different services (trials, memberships, events), so one global rule wouldn’t fit all cases.

Result:

Each agent followed up in a way that made sense for what it was selling.
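
A minimal sketch of how per-agent follow-up settings might be modeled. The class names, field names, and default values here are hypothetical and chosen for illustration; they are not Amplify's actual data model.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class FollowUpSettings:
    """Hypothetical per-agent follow-up settings (names and defaults are assumptions)."""
    enabled: bool = False
    delay_days: int = 2      # whole days only; no minute or hour granularity
    max_follow_ups: int = 1


@dataclass
class Agent:
    name: str
    service: str  # e.g. "trial", "membership", "event"
    follow_up: FollowUpSettings = field(default_factory=FollowUpSettings)


def should_follow_up(agent: Agent, days_silent: int, sent_so_far: int) -> bool:
    """Return True when this agent's own rules say a follow-up is due."""
    s = agent.follow_up
    return s.enabled and days_silent >= s.delay_days and sent_so_far < s.max_follow_ups


# Each agent carries its own rule instead of sharing one global rule.
trial_agent = Agent("Trial booker", "trial", FollowUpSettings(True, delay_days=1, max_follow_ups=2))
event_agent = Agent("Event seller", "event", FollowUpSettings(True, delay_days=3, max_follow_ups=1))
membership_agent = Agent("Membership advisor", "membership")  # follow-ups left off
```

Keeping the settings on the agent means a trial agent can nudge sooner than an event agent without either rule affecting the other.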

We gave studio owners control, with defaults and guardrails.

Decision:

Studio owners could control follow-up timing and frequency, with built-in defaults, quiet hours, and automatic time-zone handling.

Tradeoffs:

Guardrails kept the system from sending messages at the wrong time or too often, but users couldn’t override the limits in edge cases.

Constraints:

We couldn’t allow fine-grained timing controls (by minutes or hours) because overly frequent messages could trigger spam detection and cause our messaging provider to block campaigns.

Result:

The automation was easy to turn on and behaved in a predictable way.
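
To illustrate how quiet hours and time-zone handling can interact with a day-based delay, here is a rough sketch. The quiet-hours window (21:00 to 09:00), the function name, and its parameters are assumptions made for this example, not the shipped scheduling logic.

```python
from datetime import datetime, time, timedelta, timezone, tzinfo

# Assumed quiet-hours window in the lead's local time (illustrative values).
QUIET_START = time(21, 0)
QUIET_END = time(9, 0)


def next_send_time(last_message_utc: datetime, delay_days: int, lead_tz: tzinfo) -> datetime:
    """Return when a follow-up may be sent, in the lead's local time.

    The delay is counted in whole days. If the due time lands inside
    quiet hours, it is pushed to the end of the quiet window.
    """
    due = (last_message_utc + timedelta(days=delay_days)).astimezone(lead_tz)
    morning = due.replace(hour=QUIET_END.hour, minute=QUIET_END.minute,
                          second=0, microsecond=0)
    if due.time() >= QUIET_START:
        return morning + timedelta(days=1)  # late evening: wait until next morning
    if due.time() < QUIET_END:
        return morning                      # early morning: wait until quiet hours end
    return due
```

The sketch accepts any `tzinfo`, so a real zone such as `zoneinfo.ZoneInfo("America/Chicago")` from the Python standard library could be passed in place of a fixed offset.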

We added sample content so the AI knew what to say.

Decision:

Leads go quiet for different reasons, so we grouped common situations and let users add example messages for each one. The AI used these examples to guide what follow-up message to send in each case.

Tradeoffs:

This required some user input but avoided one-size-fits-all messages.

Constraints:

Silence doesn’t give the AI enough intent on its own, and generic follow-ups performed poorly.

Result:

Follow-ups matched the situation instead of repeating the same message.
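
As a rough illustration of how situation-based examples could guide the AI, the sketch below builds a prompt from owner-written samples. The situation keys, sample messages, and prompt wording are all hypothetical. Returning nothing when a situation has no examples is also an assumption, made here so the model is never asked to guess.

```python
from typing import Optional

# Hypothetical situation keys and owner-written example messages.
EXAMPLES_BY_SITUATION = {
    "asked_about_price": [
        "Hi {name}, happy to walk you through pricing. Want to book a trial class first?",
    ],
    "started_booking": [
        "Hi {name}, we still have a spot open for your trial class. Should I hold it for you?",
    ],
}


def build_follow_up_prompt(
    situation: str,
    lead_name: str,
    examples: dict[str, list[str]] = EXAMPLES_BY_SITUATION,
) -> Optional[str]:
    """Build a prompt from the examples for one situation, or None if there are none."""
    samples = examples.get(situation)
    if not samples:
        return None  # no guidance for this situation, so skip instead of guessing
    rendered = "\n".join(f"- {s.format(name=lead_name)}" for s in samples)
    return (
        f"The lead went quiet in this situation: {situation}.\n"
        "Write one short follow-up text in the same tone as these examples:\n"
        f"{rendered}"
    )
```

For instance, `build_follow_up_prompt("asked_about_price", "Sam")` would yield a prompt that includes the pricing example addressed to Sam.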

Outcome

4× higher lead conversion

Conversations without follow-ups converted at ~7%, while conversations with a single Amplify follow-up converted at ~28%.

+131 trial signups

Automated follow-ups generated 131 extra trial signups that would not have happened otherwise.

Fewer leads dropped after first contact

Follow-ups kept conversations active instead of ending after the first unanswered message.

Less manual effort for studios

Studios no longer needed to manually chase silent leads to maintain engagement.

Next steps

In current discovery, we’re seeing that most users aren’t changing the sample content for each situation, which leads to generic follow-up messages.

I questioned whether asking users to write this much content was sustainable and explored using the knowledge base instead. However, follow-ups rely on situation-based logic, and using the knowledge base would require new logic and additional development, since it isn’t designed for follow-up scenarios.

We’re now exploring ways to reduce setup effort without lowering message quality or forcing the AI to guess.

Behind the scenes

How this was built and shipped

I worked closely with the product owner to define what follow-up automation should and should not do, especially around user control, safety, and setup effort.

I partnered with the LLM developer to understand model constraints around intent, inference, and required inputs. Those discussions directly shaped decisions around behavioral context, sample guidance, and removing guesswork from AI responses.

I collaborated with backend engineers to align on scheduling logic, time-zone handling, quiet hours, and limits, ensuring the system behaved consistently and predictably.

With front-end engineers, I iterated on how controls, defaults, and explanations appeared in the UI so users could understand automation behavior without reading documentation.

Several decisions changed midway based on usage data and internal reviews. Instead of locking the design early, we adjusted scope and behavior to balance adoption, message quality, and system reliability.